When using one-step \(TD\) or \(TD(0)\), the time step determines both how frequently the action can be changed and the time interval over which bootstrapping is performed.

Ideally, bootstrapping works best when conducted over a duration in which a significant and recognizable state change has occurred. N-step bootstrapping allows for this to happen across multiple time steps.

N-step TD prediction

N-step TD prediction involves using N-step updates, which are still considered TD methods because they update earlier estimates based on differences from later estimates. However, when these TD methods are extended over N steps, they become N-step TD methods.

In one-step updates, the target is the first reward plus the discounted estimated value of the next state. Thus, the one-step return is defined as:

\[G_{t:t+1} \cdot R_{t+1} + γ V_{t}(S_{t+1})\]For two-step updates, the return is calculated as :

\[G_{t:t+2} \cdot R_{t+1} + γ R_{t+2} +γ^{2} V_{t+1}(S_{t+2})\]For N-step updates, the return is computed as :

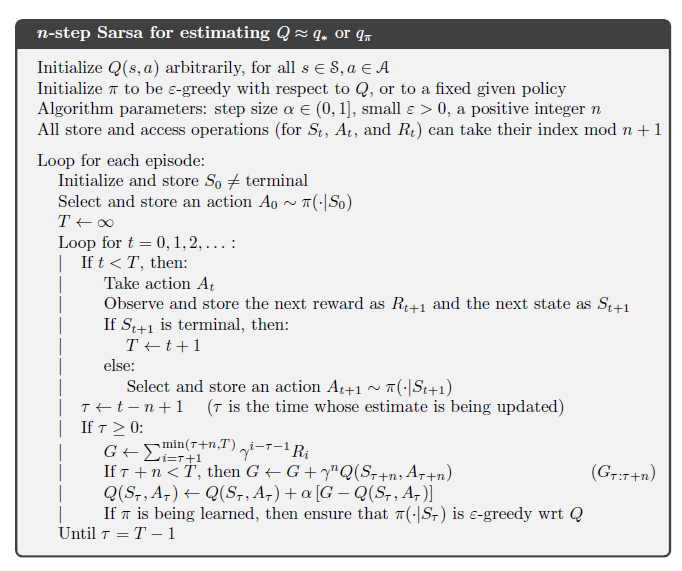

\[G_{t:t+n} \dot{=} R_{t+1} + γR_{t+2} + \cdots + γ^{n-1}R_{t+n} + γ^{n}V_{t+n-1}(S_{t+n})\]for all \(n,t\) such that \(n \ge 1\) and \(0 \le t \lt T-n\). Psuedo Code for n-step TD is given below:

Note: n-step returns for \(n \lt 1\) involve future rewards and states that are not available at the time of tansition from \(t\) to \(t+1\), but only after seeing \(R_{t+n}\) and computed \(V_{t+n-1}\). n-step return uses the value function, \(V_{t+n-1}\) to correct for the missing rewards beyond \(R_{t+n}\)

The natural state-value learning algorithm for using n-step returns is thus :

\[V_{t+n}(S_{t}) \dot{=} V_{t+n-1}(S_{t}) + α[G_{t:t+n} - V_{t+n-1}(S_{t})]\]while the values of all other states remain unchanged: \(V_{t+n}(S) = V_{t+n-1}(S)\), for all \(s ≠ S_{t}\). This algorithm is called n-step TD.

An important aspect of n-step return is that their expectation is guaranteed to be a better estimate of \(V_{\pi}\) than \(V_{t+n-1}\) is, in a worst-state sense. That is, worst error of the expected n-step return is guaranteed to be less than or equal to \(γ^{n}\) times the worst error under \(V_{t+n-1}\)

\[\max_{x}[\mathbb{E}_{\pi}[G_{t:t+n} ∣ S_{t} = s] - V_{\pi}(s) \le γ^{n} \max_{s}[V_{t+n-1}(s) - V_{\pi}(s)]]\]This is called error reduction property of n-step returns.

n-step SARSA

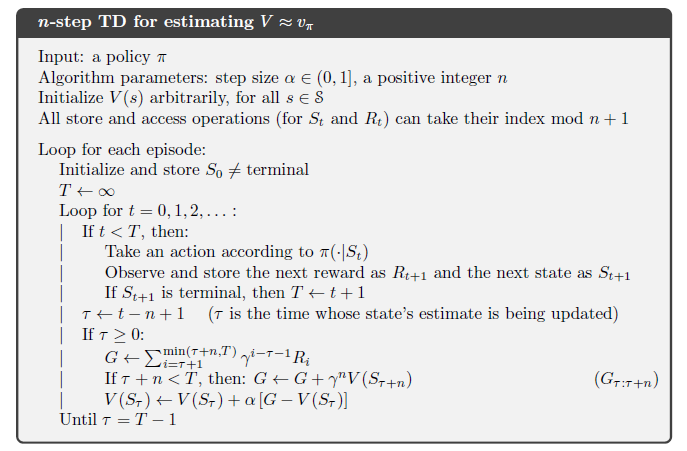

N-step of version of SARSA is called n-step SARSA. The main idea is to simply switch states for action (state-action pair) and then use \(∈ -\text{greedy}\) policy. Here, we redefine n-step returns (update targets) in terms of estimated action-values.

\[G_{t:t+n} \dot{=} R_{t+1} + γR_{t+2} + \cdots + γ^{n-1}R_{t+n} + γ^{n} Q_{t+n-1}(S_{t+n},A_{t+n})\]with \(G_{t:t+n} \dot{=} G_{t}\) if \(t+n \ge T\) . The natural algorithm is then :

\[Q_{t+n}(S_{t}, A_{t}) \dot{=} Q_{t+n-1}(S_{t},A_{t}) + α [G_{t:t+n} - Q_{t+n-1}(S_{t},A_{t})]\]with the values of all other states remain unchanged: \(Q_{t+n}(s,a) = Q_{t+n-1}(s,a)\). Pseudo Code for it is given below: