Encoder-Decoder

Encoder-Decoder architecture is based on Recurrent Neural Network(RNN). In RNN, we have a hidden state $h$ which takes a variable length sequence \(X = (x_{1},x_{2},\cdots, x_{T})\) to generate output \(y\). At any time step $t$, hidden state $h_{t}$ is updated by:

\[h_{t} = f(h_{t-1},x_{t})\]where \(f\) is a non-linear activation function. It can be element-wise sigmoid function or LSTM. Output at eah time step is the conditional distribution \(\displaystyle p(x_{t} \lvert x_{t-1},\cdots x_{1})\)

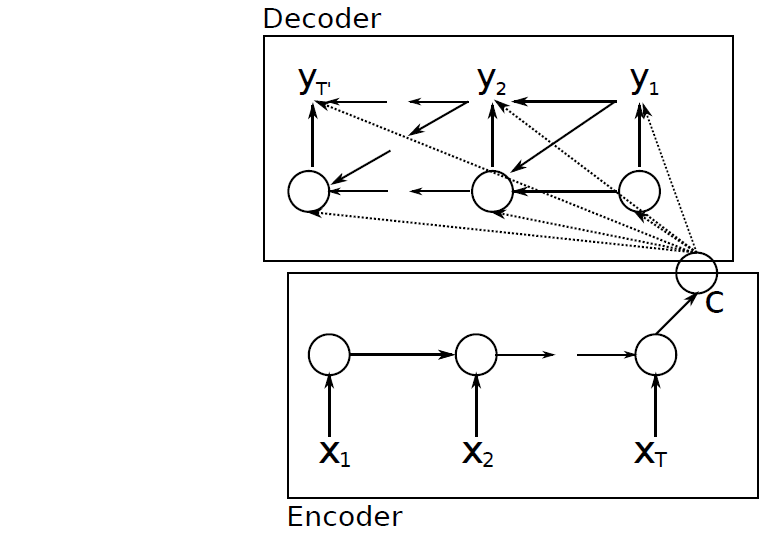

In Encoder-Decoder, we have a RNN encoder that encodes a variable-length sequence into a fixed-length vector, and a RNN decoder that decodes fixed-length vector back to variable-length sequence. Mathematically, it is a method to learn the conditional distribution over a variable-length sequence conditioned on yet another variable-length sequence e.g, \(P(y_{1},y_{2},\cdots,y_{T'} ∣ x_{1},x_{2},\cdots,x_{T})\), where importantly, input sequence $T$ and output sequence \(T^{'}\) could vary. After reading each input at all time steps, hidden state of encoder RNN is a summary \(C\) of whole input sequence as shown in image below :

RNN Encoder-Decoder Architecture

Next decoder generate the output sequence by predicting the next symbol \(y_{t}\). Here, in RNN decoder both \(y_{t}\) and \(h_{t}\) is conditioined on \(y_{t-1}\) and summary (or context vector) \(C\). Thus, hidden state of decoder can be computed by :

\[h_{t} = f(h_{t-1}, y_{t-1},C)\]Decoder defines a probability over the translation $y$ by decomposing the joint probability into the ordered conditional :

\[P(y) = \Pi_{t=1}^{T} p(y_{t} ∣ \left(y_{1},\cdots,y_{t-1}\right),c) \notag\]and the conditional distribution of the next symbol is (if \(g\) represents activation such as soft-max) :

\[P(y_{t} ∣ y_{t-1},y_{t-2},\cdots,y_{1},C) = g(h_{t},y_{t-1},C)\]This model of RNN Encoder-Decoder then jointly trained to maximize the conditional log-likelihood

\[\begin{equation} \max_{θ}\, \frac{1}{N} \, \sum\limits_{n=1}^{N} \text{log}\,\,p_{θ}(y_{n} \lvert X_{n}) \end{equation}\]where \(\theta\) is the set of the model parameters and each \((x_{n},y_{n})\) is an (input sequence, output sequence) pair from training set.

(Cho et al., 2014b) prposed to use LSTM (paper says it is LSTM but it is GRU, for having reset and update gates) in hidden state. When reset gate is close to $0$, hidden state is forced to ignore previous hidden state and continue with current input. This effectively allows the hidden state to drop any irrelevant information. Update gate, on the other hand, controls how much information from the previous hidden state get to pass on to next hidden state. Combination of these two gates, thus, allows more compact and improved representation of output sequence.

(Sutskever et al., 2014), however, proposed three changes :

- Two LSTM to be used - one for encoder, another for decoder.

- Deep LSTM should be used. LSTM with four layers is implemented.

- To reverse the order of input sequence. For instance, instead of mapping the input sequence \(a,b,c\) to \(α,\beta,\gamma\) it asked to map \(c,b,a\) to \(\alpha,\beta,\gamma\).

Bahdanau Model

Bahdanau model is based on RNN Encoder-Decoder that learns to align and translate simultaneously. This model also uses BiDirectional RNN (BiRNN) for Encoder.

BiRNN Encoder consists of forward and backward RNN. The forward RNN \(\vec f\) reads the input sequence in order (from \(x_{1}\) to \(x_{T}\)) and provides forward hidden states i.e, \(\vec h_{1},\cdots,\vec h_{T}\).

The backward RNN \(f\) reads sequence in reverse (from \(x_{T},\cdots,x_{1}\)) providing sequence of backward hidden states \(\overleftarrow{h_{1}}, \cdots,\overleftarrow{h_{T}}\).

This way we obtain the annotation for each word \(x_{j}\) by concatenating the forward hidden and backwards hidden states i.e, \(h_{j} = [\overrightarrow h_{j} ; \overleftarrow h_{j}]^T\) (Bahdanau et al., 2014).

Each annotations \(h_{i}\) obtained this way contains information about the whole input sequence with a strong focus on the parts surrounding the \(i^{th}\) word of the input sequence.

The context vector \(c_{i}\) is then computed as a weighted sum of these annotations \(h_{i}\) as :

\[c_{i} = \sum\limits_{j=1}^{T_{x}} \alpha_{ij} h_{j} \notag\]and, the weight of each annotation $h_{j}$ is computed as :

\[\begin{equation} \alpha_{ij} = \frac{exp(e_{ij})}{\sum_{k=1}^{T_{x}}(exp(e_{ik}))} \end{equation}\]where, \(e_{ij} = a(s_{i-1},h_{j})\) is an alignment mode which scores how well the inputs around position \(j\) and the ouput at position \(i\) match. Now, the Decoder part - Bahdanau model Decoder, in comparison with eq(3) define each conditional probability as :

\[p(y_{i} \lvert y_{1},...,y_{i-1},X) = g(y_{i-1},s_{i},c_{i}) \notag\]where \(s_{i}\) is an RNN hidden state for time $i$ computed by :

\[s_{i} = f(s_{i-1},y_{i-1},c_{i}) \notag\]This way Bahdanau model achieve significantly improved translation in comparison with conventional Encoder-Decoder, and especially in the cases of longer sentences.

Attention Mechanism and Transformers

Transformers architecture is based on Encoder-Decoder, but uses Attention Mechanism. Lets first discuss Attention Mechanism and key things in it.

Like visual world, Attention Mechanism is two component framework, here :

volitional cues i.e., queries

nonvolitional cues i.e., keys

Interaction between queries and keys results Attention Pooling, and based on kind of it, we aggregate values(sensory inputs) to generate output.

Non-parametric Attention Pooling

Given a input-output pair \({(x_{1},y_{1}),\cdots,(x_{n},y_{n})}\) if we have to find \(f(x)\) for new input \(x_{n+1}\), then with Nadaraya-Watson Kernel Regression, it can be computed by (if \(K\) is kernel)

\[\begin{equation} f(x) = \sum\limits_{i=1}^n \frac{K(x - x_{i})} {\sum\limits_{j=1}^n K(x- x_{j})} y_{i} \end{equation}\]This can be further simplified as :

\[f(x) = \sum\limits_{i=1}^{n} \alpha(x,x_{i}) y_{i}\]Here, \(x\) is query and \((x_{i},y_{i})\) is key-value pair.

Eq(6) is Attention Pooling that provides weighted avergae of output \(y_{i}\) by Attention Weight i.e, \(\alpha(x,x_{i})\). To get better intuition on Attention Pooling, lets plugin Gaussian kernel in above equation :

\[\begin{align} f(x) &= \sum\limits_{i=1}^n \, \alpha(x,x_{i})\,y_{i} \notag\\ &=\sum\limits_{i=1}^n \frac{exp(-\frac{1}{2}(x-x_{i})^2)}{\sum_{j=1}^{n} exp(-\frac{1}{2}(x - x_{j})^{2})}y_{i} \notag\\ &=\sum\limits_{i=1}^n \text{softmax} (-\frac{1}{2}(x - x_{i})^2 ) y_{i} \notag\\ \end{align}\]This is non-parametric Attention Pooling, we can see that a key \(x_{i}\) closer to query $x$ will be given more attention with large attention weight.

Parametric Attention Pooling

Parametric method is just slightly different from the non-parametric. Here, the distance between the query \(x\) and key \(x_{i}\) is multiplied by a learnable parameter \(w\). Thus, \(f(x)\) can be expressed as :

\[f(x) = \sum\limits_{i=1}^n \text{softmax} (-\frac{1}{2}((x -x_{i})w)^2)y_{i} \notag\]Attention Scoring Function

Exponent of Gaussian Kernel is said to be Attention Scoring Function. In any type of attention pooling, it maps two vectors to scalar, and when computed by softmax operation, it provides attention weight for the query \(x\) and key \(x_{i}\).

If we have a query \(q\) and \(n\) key-value pairs \((k_{1},v_{1}, \cdots,(k_{n},v_{n}))\), then attention pooling is computed as:

\[f(q(k_{1},v_{1}),\cdots,(k_{n},v_{n})) = \sum\limits_{i=1}^n \alpha(q,k_{i})v_{i} ∈ \mathbb{R}^v \notag\]where attention weight is computed as :

\[α(q,k_{i})= \text{softmax} (a(q,k_{i})) = \frac{ \text{exp}(a(q,k_{i}))}{\sum_{j=1}^n \text{exp}(a(q,k_{j}))} ∈ \mathbb{R}^v \notag\]We can observe that different value of attention scoring function i.e, \(a\) can lead to different attention weight, and thus different output for attention pooling. Two popular attention scoring function are :

Additive Attention

Additive Attention is used as scoring function when queries and keys are vectors of different lengthhs. If we have query \(q ∈ \mathbb{R}^q\) and a key \(k ∈ \mathbb{R}^k\), the additive attention is expressed as :

\[a(q,k) = w_{v}^T \, \text{tanh} (W_{q}q + W_{k}k) ∈ \mathbb{R} \notag\]where learnable parameters \(W_{q} ∈ \mathbb{R}^{h × q},W_{k} ∈ \mathbb{R}^{h × k}\) and \(w_v ∈ \mathbb{R}^h\). Here, \(h\) is hidden units number.

Scaled Dot-Product Attention

Dot-product is used when we have both the query and key of similar vector length \(d\). It is further scaled up so that the variance of dot product still remain one by dividing with \(\sqrt{d}\). Scaled Dot-Product Attention scoring function is then :

\[a(q,k) = \frac{q^T k}{\sqrt{d}} \notag\]If we have value as \(V\), the attention weight thereafter can be written as :

\[\text{softmax} (\frac{Q K^T}{\sqrt{d}})V \notag\]Multi-Head Attention

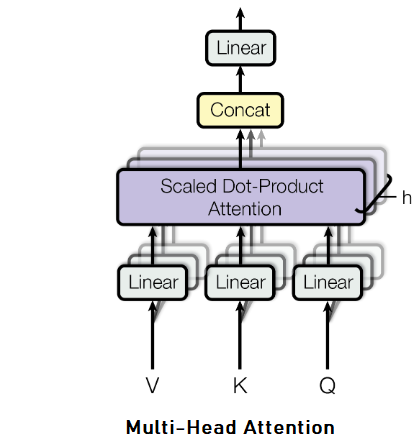

Multi-Head Attention allows the attention function to extract information from different representations. It linearly projects the queries, keys and values \(h\) time, each time using a different learned projection to \(d_{k},d_{q}\) and \(d_{v}\) dimensions.

Then, on each of these projects, we implement Scaled Dot-Product Attention function to gain \(d_{v}\) dimension output.

These, afterwards gets concatenated and projected again to provide final result. It can be expressed as :

\[\text{MultiHead} (Q,K,V) = \text{concat} (\text{head}_{1},\cdots,\text{head}_{h})W^{o} \notag\]where

\[\text{head}_{i} = \text{Attention} (QW_{i}^Q, KW_{i}^K, VW_{i}^V) \notag\]Here, the projections are parameter matrices \(W_{i}^Q ∈ \mathbb{R}^{d_{\text{model}} × d_{k}}, W_{i}^K ∈ \mathbb{R}^{d_{\text{model}} × d_{k}}, W_{i}^V ∈ \mathbb{R}^{d_{\text{model}} × d_{k}}\), and \(W^{o} ∈ \mathbb{R}^{hd_{v} × d_{\text{model}}}\)

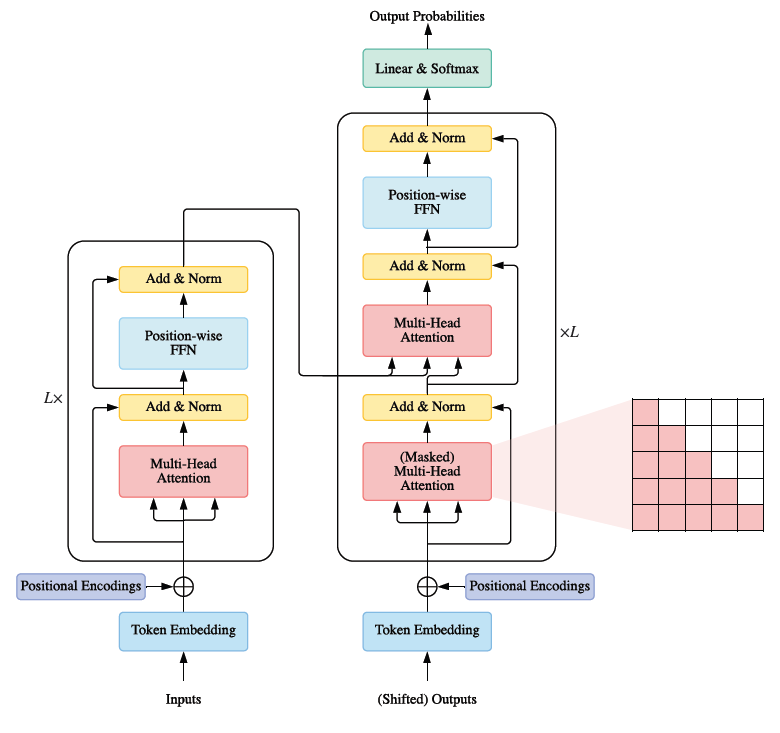

Transformers

Transformers consists of an Encoder-Decoder pair, where each of them is a stack of $L$ identical blocks. Encoder block is composed of Multi-Head attention and a position-wise feed-forward network (FFN). In addition, a residual connection, followed by Layer Normalization around each module. Decoder block, on the other hand, additionally include cross-attention modules between Multi-Head attention and position-wise FFNs. Transformers architecture can be seen below :

There are three types of attentions used here in Transformers :

- Self-Attention It is when queries, keys, and values are generated from the same sequence.

- Cross-Attention It is between encoder and decoder, and opposite of self-attention. Here, keys and values are generated by a different sequence than queries.

- Masked Multi-Head Attention In transformer decoder side, we apply a mask function to the unnormalized attention matrix \(\displaystyle \hat{A} =\text{exp} ∣ \frac{QK^T}{\sqrt{D_{k}}}\), where the illegal positions are masked out by setting \(\hat{A_{ij}} = - \infty\) if \(i \le j\).

Position-Wise FFN : The position-wise FFN is a fully connected feed-forward module that operates separately and identically on each position

\[\text{FFN(H')} = \text{ReLU}(H'W^1 +b^1)W^2 +b^{2} \notag\]Position Encoding : Since Transformers do not have recurrence or convolution, it doesn’t really know the which word is at which position. Therefore, position needs to be encoded. One way is to append sine and cosine waves with different frequencies with word vector. This helps transformer to learn the word position because each position have unique combination of values.

References

- Cho, K., Van Merriënboer, B., Gulcehre, C., Bahdanau, D., Bougares, F., Schwenk, H. and Bengio, Y., 2014. Learning phrase representations using RNN encoder-decoder for statistical machine translation. arXiv preprint arXiv:1406.1078.

- Sutskever, I., Vinyals, O. and Le, Q.V., 2014. Sequence to sequence learning with neural networks. Advances in neural information processing systems, 27.

- Bahdanau, D., Cho, K. and Bengio, Y., 2014. Neural machine translation by jointly learning to align and translate. arXiv preprint arXiv:1409.0473.